The Quantum Hype Train: All Aboard Or Off The Track?

By John Oncea, Editor

Quantum technologies are here! Or are they? Well, they’re on their way! Or are they? Well … they’ll for sure change the world eventually, right? Well, it’s complicated.

Go to the search engine of your choice and look up “What are experts saying about quantum technologies?” Go ahead, I’ll wait.

I just Googled that myself, setting the search to “Past month,” and up popped about 1,070,000 results in 0.44 seconds. Now, I haven’t checked all 1,070,000 results, but I’m thinking if I did, I’d end up with 1,070,000 different answers.

Forbes says quantum computing has arrived and we need to prepare for its impact.

The BBC says powerful quantum computers could arrive in years, not decades.

Microsoft says it made a quantum breakthrough, but Amazon, according to Yahoo, says Microsoft’s scientific paper “doesn’t actually demonstrate” its claims.

So, despite the hype surrounding quantum technologies, the most accurate answer when asked if the hype is real just might be ¯\_(ツ)_/¯.

But they don’t pay me to shrug my shoulders, so let’s take a look at what quantum technologies are, whether they’re here, and, if they’re not, whether they ever will be.

What Do We Mean By “Quantum Technologies”?

According to the World Economic Forum, quantum technologies are a class of technology that leverages the principles of quantum mechanics – including quantum entanglement, quantum superposition, and the uncertainty principle – to create new capabilities in areas like computing, communication, and sensing, potentially revolutionizing various industries.

So that’s a definition, but what are quantum technologies? To start, it might be easier (a relative term) to look at one specific quantum technology – quantum computing – and compare it to good old classical computing.

Classical and quantum computing represent two fundamentally different paradigms in information processing, each with its unique principles, capabilities, and challenges, writes Vocal. Let’s start with the basic principles:

- Classical Computing: Relies on bits as the fundamental unit of data, where each bit is definitively either 0 or 1. Computations are performed using logical operations (e.g., AND, OR, NOT) on these bits.

- Quantum Computing: Utilizes quantum bits, or qubits, which, due to the principle of superposition, can represent 0, 1, or a combination of both at the same time. This lets a group of qubits encode a vast number of possibilities at once, although measuring them yields just one result. Additionally, qubits can exhibit entanglement, meaning the state of one qubit can be directly correlated with the state of another, regardless of distance.
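
To make superposition a little more concrete, here is a minimal Python sketch. It is our own illustration, not Vocal’s, and the function names are hypothetical. The sketch models one qubit as two amplitudes: alpha for 0 and beta for 1. It shows that superposition sets the odds of each outcome, while every individual measurement still returns a single 0 or 1.

```python
import math
import random

# Toy model: a single qubit is two amplitudes (alpha, beta) for 0 and 1,
# normalised so that |alpha|^2 + |beta|^2 = 1.
def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the qubit alpha|0> + beta|1> (the Born rule)."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    norm = p0 + p1  # guards against small rounding errors in the inputs
    return p0 / norm, p1 / norm

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the qubit yields a single definite 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# An equal superposition of 0 and 1: (|0> + |1>) / sqrt(2).
alpha = beta = 1 / math.sqrt(2)
print(measurement_probabilities(alpha, beta))  # roughly (0.5, 0.5)

rng = random.Random(42)
shots = [measure(alpha, beta, rng) for _ in range(1000)]
print(shots.count(0), shots.count(1))  # each count lands near 500
```

A real quantum computer does not store alpha and beta where you can read them. You only ever see the 0s and 1s that come out of repeated measurements.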

Next up, computational power:

- Classical Computing: Capable of solving a wide array of problems, especially those that can be broken down into sequential logical operations. However, certain complex problems, like factoring large numbers or simulating quantum systems, are computationally intensive and time-consuming for classical systems.

- Quantum Computing: Offers the potential to solve specific problems more efficiently than classical computers. For example, Shor's algorithm would let a sufficiently large, error-corrected quantum computer factor large numbers vastly (superpolynomially, often described as exponentially) faster than the best-known classical algorithms. Similarly, Grover's algorithm provides a quadratic speedup for unstructured search problems.
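
To see where Grover’s quadratic speedup comes from, here is a minimal classical simulation in Python. It is our own sketch, not from Vocal, and the function name and 64-item example are assumptions. The code tracks the amplitudes of a 6-qubit register. It shows that about (π/4)·√N oracle calls, which is 6 here, are enough to find one marked item among N = 64. A classical search of an unsorted list would need about 32 checks on average.

```python
import math

def grover_success_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Simulate Grover's search on a classical state vector of real amplitudes."""
    size = 2 ** n_qubits
    # Start in a uniform superposition over all `size` items.
    amps = [1 / math.sqrt(size)] * size
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]
    # Probability of reading out the marked item when the register is measured.
    return amps[marked] ** 2

n = 6                                                # 6 qubits -> 64 items
size = 2 ** n
best_k = math.floor(math.pi / 4 * math.sqrt(size))   # about (pi/4) * sqrt(N) iterations
print(best_k, grover_success_probability(n, marked=37, iterations=best_k))
# prints 6 and a success probability close to 1
```

Each iteration nudges probability toward the marked item. That is why the number of oracle calls grows with √N rather than N. Simulating this classically is only practical for small n, because the list of amplitudes doubles with every added qubit.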

Each type of computing comes with its challenges:

- Classical Computing: Faces limitations in scaling down components due to physical constraints and energy consumption, which can hinder performance improvements.

- Quantum Computing: Encounters challenges such as qubit instability (decoherence), error rates, and the need for operating conditions like extremely low temperatures. Scaling quantum systems while maintaining qubit coherence and reducing errors remains a significant hurdle.
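
Why do error rates matter so much? A rough back-of-the-envelope model helps. It assumes that every gate in a circuit fails independently with the same small probability. That is a simplification of our own, and the fidelity values below are hypothetical. Under this model, the chance that a whole circuit runs cleanly shrinks exponentially with its length.

```python
def circuit_success_probability(gate_fidelity: float, gate_count: int) -> float:
    """Chance that every gate succeeds, assuming independent errors (a simplification)."""
    return gate_fidelity ** gate_count

# Hypothetical per-gate fidelities and circuit sizes, chosen only for illustration.
for fidelity in (0.99, 0.999, 0.9999):
    for gates in (100, 1_000, 10_000):
        p = circuit_success_probability(fidelity, gates)
        print(f"fidelity={fidelity}, gates={gates:>6}: success ~ {p:.4f}")
```

Even at 99.9% per-gate fidelity, this toy model gives only about a 37% chance that a 1,000-gate circuit runs error-free. This is why reducing errors and adding error correction are central to scaling.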

Finally, a few current applications of each include:

- Classical Computing: Widely used across all sectors, from everyday applications like word processing and internet browsing to complex tasks like data analysis, artificial intelligence, and large-scale simulations.

- Quantum Computing: Currently in experimental and specialized use, with applications in cryptography, optimization problems, and simulating quantum physical processes. As the technology matures, its application scope is expected to broaden.

While classical computing remains the backbone of current technology, quantum computing holds promise for revolutionizing fields that require handling complex computations beyond the reach of classical systems. Ongoing research indicates a rapidly evolving landscape where quantum computing may complement and, in specific domains, surpass classical computing capabilities.

Got it? Feel free to skip ahead to the next section. Still confused? Let’s simplify it a little bit more.

This article is written and read in English. But for a classical computer to understand it, the computer has to translate it into binary code, a bunch of 0s and 1s that it strings together to make something meaningful. Each individual 0 or 1 is called a bit, and groups of bits (for example, eight-bit bytes) represent individual characters, symbols, or patterns.
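
For instance, this tiny Python sketch shows how the word “Hi” becomes bits. It assumes UTF-8, one common way computers store text. The function name is hypothetical.

```python
def text_to_bits(text: str) -> str:
    """Encode text as UTF-8 bytes and show each byte as eight 0s and 1s."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))

print(text_to_bits("Hi"))  # 01001000 01101001
```

“H” is stored as the number 72 and “i” as 105. Each number is written out as eight bits.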

So, long story short: whatever we instruct a normal computer to do, it does using binary code, that is, bits. A quantum computer, on the other hand, works primarily using four key principles of quantum physics. These are:

- Superposition: A quantum object can exist in a combination of multiple states at once. Imagine your computer being switched on and off at the same time. That’s impossible for a laptop, but it’s roughly what superposition means for a qubit.

- Interference: Like overlapping waves, the possible states of a quantum object can reinforce each other (constructive interference) or cancel each other out (destructive interference). Quantum algorithms use this to boost the odds of right answers and suppress wrong ones.

- Entanglement: Two or more quantum objects can become so deeply linked that the state of one can’t be described without describing the other. Measure one member of an entangled pair and the result will be correlated with its partner’s, no matter how far apart the two are.

- Measurement: Measuring a quantum system disturbs it. A qubit in superposition settles into a single definite value (0 or 1) when measured, so it is impossible to observe a quantum state without altering it, which challenges classical intuitions about measurement and reality.
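
Two of these principles, interference and entanglement, can be seen in a small state-vector toy model. This Python sketch is our own illustration; real hardware is not programmed this way, and the function names are hypothetical. Applying a Hadamard gate twice makes the amplitudes for 1 cancel. Sampling a Bell state shows two qubits that always agree when measured.

```python
import math
import random

H = 1 / math.sqrt(2)

def hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply a Hadamard gate to a single-qubit state (amp0, amp1)."""
    a0, a1 = state
    return (H * (a0 + a1), H * (a0 - a1))

# Interference: one Hadamard turns 0 into an equal superposition...
once = hadamard((1.0, 0.0))
# ...and a second one makes the two contributions to 1 cancel (destructive)
# while the contributions to 0 add up (constructive).
twice = hadamard(once)
print(once, twice)  # twice is (approximately) (1.0, 0.0)

# Entanglement: the Bell state (|00> + |11>) / sqrt(2), as amplitudes over 00, 01, 10, 11.
bell = {"00": H, "01": 0.0, "10": 0.0, "11": H}

def measure_pair(state: dict[str, float], rng: random.Random) -> str:
    """Measurement: sample one two-qubit outcome with probability |amplitude|^2."""
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights)[0]

rng = random.Random(7)
results = [measure_pair(bell, rng) for _ in range(1000)]
# Each qubit on its own looks like a coin flip, yet the two always agree.
print(results.count("00"), results.count("11"), results.count("01") + results.count("10"))
```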

Now, remember bits? In classical computing, a bit can be either 0 or 1, but not both at the same time. In quantum computing, thanks to superposition, a bit can be both 0 and 1 at the same time. We call this a quantum bit, or qubit, and qubits are why quantum computers are expected to excel at complex simulations, such as molecular dynamics or wind turbulence, and at cryptographic applications.

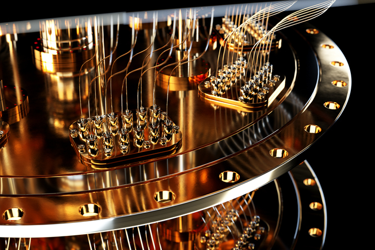
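
One way to see why simulating quantum systems strains classical machines: describing n qubits in general takes 2^n complex amplitudes. The quick Python calculation below shows how fast that grows. It assumes 16 bytes per amplitude, meaning one complex number stored as two 64-bit floats.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats

def amplitudes_needed(n_qubits: int) -> int:
    """A general n-qubit state has one complex amplitude per basis state: 2**n of them."""
    return 2 ** n_qubits

def memory_bytes(n_qubits: int) -> int:
    """Memory a classical computer needs to store that full state vector."""
    return amplitudes_needed(n_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 30, 50):
    print(f"{n} qubits: {amplitudes_needed(n):,} amplitudes, {memory_bytes(n):,} bytes")
```

At 30 qubits the full state already needs 16 GiB. At 50 qubits it needs 2^54 bytes, roughly 18 petabytes. Every extra qubit doubles the requirement.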

Now, you may be asking how a particle can exist in two states at the same time. The answer is that quantum effects show up most clearly at the molecular and atomic scale. That’s why classical bits are made from electrical pulses, while qubits are built from physical systems that behave quantum mechanically: superconducting circuits, trapped ions, nitrogen-vacancy (NV) centers in diamond, and photons.

TLDR: Quantum computers use the laws of quantum physics, which could let them perform certain tasks much faster than classical computers.

Current Uses Of Quantum Technologies

Quantum technologies are currently used – or could be used soon – in quantum sensing for precise measurements, quantum computing for cybersecurity and financial modeling, and quantum cryptography for enhanced data security.

Let’s take a closer look at these instances, starting with quantum sensing, in which sensors leverage the principles of quantum mechanics for applications that require highly sensitive measurements. Examples of this include:

- Medical Imaging: Quantum sensors are being developed for next-generation medical devices, like MRI scanners, offering improved resolution and faster results.

- Geological Mapping: Quantum sensors can measure magnetic fields and gravity with unprecedented precision, aiding in mapping geological activity and mineral discovery.

- Environmental Monitoring: Quantum sensors can accurately measure atmospheric conditions and pollution levels, contributing to environmental research and monitoring.

- Navigation: Quantum sensors are being used for navigation on moving vehicles, offering improved accuracy and reliability.

Next from our introduction is quantum computing, which has the potential to revolutionize cybersecurity by making it easier to spot threats, improving cryptography, and opening up new encrypted communication channels. Beyond cybersecurity, potential applications include:

- Financial Modeling: Quantum computing can be used to improve machine learning capabilities, aid in financial modeling, and optimize portfolio allocations.

- Weather Forecasting: Quantum computers could analyze vast amounts of weather data to generate more accurate and detailed weather predictions.

- Materials Science: Quantum computing can be used to simulate and design new materials with specific properties, leading to advancements in various fields.

Finally, there’s quantum cryptography, which uses the principles of quantum mechanics to secure communication and is becoming increasingly important as a way to protect sensitive data from eavesdropping. One example is quantum key distribution (QKD), a promising technology that lets two parties share an encryption key while detecting any eavesdropper. That could make their communication effectively unbreakable and keep it confidential.
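
To show how QKD can expose an eavesdropper, here is a toy Python simulation of BB84, one well-known QKD protocol. The article doesn’t name a specific protocol, so BB84 is our choice. Random basis choices stand in for real photon polarizations, and the function name is hypothetical. Alice and Bob keep only the bits where their bases matched. Without an eavesdropper, those bits agree perfectly. An intercept-and-resend eavesdropper corrupts about a quarter of them.

```python
import random

def bb84_error_rate(n_photons: int, eavesdropper: bool, seed: int = 0) -> float:
    """Toy BB84 run: return the error rate in the sifted key Alice and Bob share."""
    rng = random.Random(seed)
    errors = kept = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)          # Alice's secret bit
        alice_basis = rng.choice("+x")   # Alice's encoding basis
        photon_bit, photon_basis = bit, alice_basis

        if eavesdropper:
            eve_basis = rng.choice("+x")
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: Eve's reading is random
            photon_basis = eve_basis            # Eve resends what she read, in her basis

        bob_basis = rng.choice("+x")
        # Matching basis reads the bit faithfully; a mismatched basis gives a random result.
        bob_bit = photon_bit if bob_basis == photon_basis else rng.randint(0, 1)

        # Sifting: keep only positions where Alice and Bob used the same basis.
        if bob_basis == alice_basis:
            kept += 1
            errors += bob_bit != bit
    return errors / kept

print(bb84_error_rate(10_000, eavesdropper=False))  # 0.0
print(bb84_error_rate(10_000, eavesdropper=True))   # close to 0.25
```

In practice, Alice and Bob compare a random sample of their sifted bits publicly. A high error rate tells them someone was listening, and they discard the key.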

So, The Hype Is Real, Right?

Experts see quantum technology as transformative, potentially revolutionizing fields like medicine, materials science, and finance. Some compare quantum technology’s potential to the internet or AI, suggesting it could fundamentally change how we work and innovate across various industries.

If these experts are correct, quantum technologies will create economic value potentially worth trillions of dollars within the next decade, revolutionizing every industry by offering faster and more accurate solutions.

No, No, It’s Not.

On the other hand, quantum computing is still in the early stages of development; less optimistic experts describe it as being at the lab-scale, proof-of-concept stage. When (if?) quantum technologies advance to the next stage of development, building large-scale, reliable quantum computers with low error rates will be a significant technical challenge. Maintaining the delicate quantum states of qubits will prove challenging too, as this requires extremely cold temperatures and shielded environments.

While progress is being made, experts generally agree that widespread practical applications of quantum computing are still several years, if not a decade, away. As we navigate the next 10 years of quantum technological development, we must consider ethical implications such as the potential for misuse and the need for responsible development and regulation.

Other concerns include the incredible energy demands of quantum computing (especially the need for cryogenic cooling), the potential quantum computers have to break current encryption methods (posing a cybersecurity threat), and the lack of skilled quantum scientists and engineers.
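
The threat to encryption comes mainly from Shor’s algorithm. It could break widely used schemes such as RSA by factoring large numbers. In Shor’s algorithm, the quantum computer’s job is to find the “order” r of a number a modulo N. The rest is ordinary arithmetic. The Python sketch below brute-forces the order classically for tiny numbers. That brute-force step is exactly what becomes infeasible for the 2,048-bit numbers used in practice. The function names are our own.

```python
import math
from typing import Optional, Tuple

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, by brute force (assumes gcd(a, n) == 1).
    This is the step Shor's algorithm would speed up on a quantum computer."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing used in Shor's algorithm."""
    common = math.gcd(a, n)
    if common != 1:
        return common, n // common  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a**(r/2) == -1 mod n: retry with a different a
    p = math.gcd(half - 1, n)
    return p, n // p

print(find_order(7, 15), factor_from_order(7, 15))  # 4 (3, 5)
```

For N = 15 and a = 7, the order is 4. Then 7² = 49, and gcd(48, 15) = 3 reveals the factors 3 and 5.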

What’s The Verdict?

¯\_(ツ)_/¯

There are so many variables, issues with semantics, and other subjective components to the question of the current and future states of quantum computing that you could answer it in an infinite number of ways and be right and wrong at the same time.

As of right now, the mere idea of quantum computing is already disrupting the way we process information, a disruption that will intensify with each advance researchers make. Those researchers remain busy, knowing that quantum technologies could also give us myriad ways to address problems that even the most powerful classical supercomputers could never solve.

Advances in quantum computer design, fault-tolerant algorithms, and new fabrication technologies are turning this technology into a real prospect, one that could outperform classical computation in some applications within a decade or two.