3 Common Pitfalls Of 802.11ax DVT (That Test Developers Should Avoid)

By Yuka Muto, LitePoint

802.11ax is the next evolution in Wi-Fi standards, and it promises to dramatically increase overall network capacity, especially in crowded, high-density environments such as school campuses, airports, cafes, apartment complexes, and sports stadiums.

The focus of 802.11ax is not so much to increase the speed of individual Wi-Fi devices as to improve aggregate throughput across all devices on a network. As of 2018, 802.11ax chipsets are starting to become available in the market, and Wi-Fi device makers need to adopt and validate their new 802.11ax devices rapidly.

The transition from previous 802.11 standards, such as 802.11n and 802.11ac, to 802.11ax adds several new technologies and performance requirements that must be fully tested, and designing the scenarios to test them presents a number of challenges.

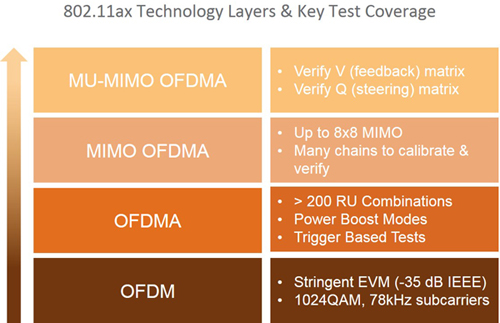

Pitfall #1 — Major Changes Between 802.11ac and 802.11ax

The first common design verification test (DVT) pitfall is underestimating how much changes from 802.11ac to 802.11ax. 802.11ax introduces Orthogonal Frequency Division Multiple Access (OFDMA) to Wi-Fi. Although OFDMA builds on Orthogonal Frequency Division Multiplexing (OFDM), the basis of previous Wi-Fi standards, OFDMA and the technologies layered on top of it bring an entirely new set of test requirements that OFDM-era test methods do not cover.

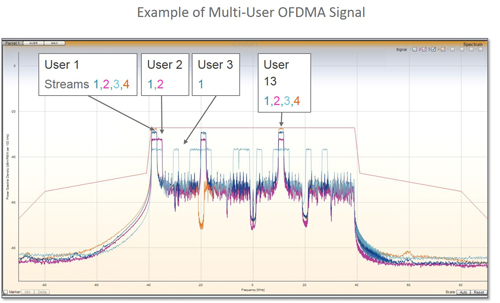

In OFDM, an access point (AP) serves one user, also known as a station (STA), at a time by dedicating the entire channel, with all of its subcarriers, to that user for a given period. OFDMA allows multiple users to divide and share a channel. Each sub-channel, a group of subcarriers, is called a resource unit (RU); the smallest RU spans 26 subcarriers. An AP can dynamically allocate RUs to serve all the users in its network efficiently. For example, a user streaming high-definition video might get a larger RU, with more subcarriers, than a user browsing a static webpage. This efficient use of spectrum improves overall network capacity.
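To make the RU idea concrete, the sketch below tabulates how many RUs of each size fit in one channel, using the 802.11ax RU sizes of 26, 52, 106, 242, 484, and 996 subcarriers (plus the 2×996-tone RU at 160 MHz). The table name and helper functions are hypothetical illustrations, not part of any test tool's API.

```python
from typing import List

# Maximum number of resource units (RUs) of each size that fit in one
# 802.11ax channel: bandwidth in MHz -> {RU size in subcarriers: count}.
# The 160 MHz entry's largest RU is the 2x996-tone RU, written here as 1992.
MAX_RUS_PER_CHANNEL = {
    20: {26: 9, 52: 4, 106: 2, 242: 1},
    40: {26: 18, 52: 8, 106: 4, 242: 2, 484: 1},
    80: {26: 37, 52: 16, 106: 8, 242: 4, 484: 2, 996: 1},
    160: {26: 74, 52: 32, 106: 16, 242: 8, 484: 4, 996: 2, 1992: 1},
}


def max_ofdma_users(bandwidth_mhz: int) -> int:
    """Upper bound on simultaneous OFDMA users: one smallest (26-tone) RU each."""
    return MAX_RUS_PER_CHANNEL[bandwidth_mhz][26]


def ru_sizes(bandwidth_mhz: int) -> List[int]:
    """RU sizes (in subcarriers) available in a channel of the given width."""
    return sorted(MAX_RUS_PER_CHANNEL[bandwidth_mhz])


if __name__ == "__main__":
    for bw in sorted(MAX_RUS_PER_CHANNEL):
        print(f"{bw} MHz: up to {max_ofdma_users(bw)} users, RU sizes {ru_sizes(bw)}")
```

An AP scheduler picks a mix of these sizes for each transmission, which is why the number of possible RU allocations grows so quickly.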

Error vector magnitude (EVM) is the key metric for detecting imperfections in a Wi-Fi device's transmit quality. 802.11ax introduces 1024-QAM modulation, and the standard specifies an EVM of -35 dB for the highest data rate, compared to -32 dB for 256-QAM in 802.11ac. To measure against this more stringent requirement, test instruments need lower phase noise than those used for 802.11ac testing.
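EVM limits are quoted in both dB and percent, and the tester's own residual EVM adds to what it measures. The sketch below shows the conversion and a rough estimate of that added error. It assumes the DUT and tester error contributions are uncorrelated and therefore add in power, a common approximation rather than a guarantee; the function names and the -45 dB tester floor are hypothetical.

```python
import math


def evm_db_to_percent(evm_db: float) -> float:
    """EVM is an RMS amplitude ratio, so dB = 20*log10(ratio)."""
    return 100.0 * 10 ** (evm_db / 20.0)


def evm_percent_to_db(evm_percent: float) -> float:
    """Inverse of evm_db_to_percent."""
    return 20.0 * math.log10(evm_percent / 100.0)


def measured_evm_db(dut_evm_db: float, tester_floor_db: float) -> float:
    """Combine DUT and tester EVM, assuming uncorrelated errors that add in power."""
    total_power_ratio = 10 ** (dut_evm_db / 10.0) + 10 ** (tester_floor_db / 10.0)
    return 10.0 * math.log10(total_power_ratio)


if __name__ == "__main__":
    for name, limit in [("802.11ac, 256-QAM", -32.0), ("802.11ax, 1024-QAM", -35.0)]:
        print(f"{name}: {limit} dB = {evm_db_to_percent(limit):.2f}%")
    # A DUT exactly at -35 dB, measured with a hypothetical -45 dB tester floor.
    print(f"measured: {measured_evm_db(-35.0, -45.0):.2f} dB")
```

Under this model, even a tester floor 10 dB below the limit makes a DUT that sits exactly at -35 dB read about -34.59 dB, so it appears to fail. Lower phase noise, and therefore a lower tester floor, shrinks this penalty.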

For OFDMA to work seamlessly, users must be able to share the same channel simultaneously without causing excessive interference to one another. One challenge is that users are located at varying distances from the AP, so their signals experience different path losses and can arrive at significantly different power levels.

For this reason, the AP must be able to boost or reduce the power of individual sub-channels to compensate for the different path losses to multiple users. Likewise, each station must be able to regulate its own transmit power so that it doesn’t drown out other users sharing the same channel.
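Station-side power control can be sketched as path-loss compensation. The station estimates path loss from the AP's transmit power and the downlink signal strength it measures, then sets its own transmit power so that its signal arrives at a target level at the AP. This is a simplified model; the parameter names and default power limits are hypothetical, and a real device follows the 802.11ax specification and its own calibration.

```python
def uplink_tx_power_dbm(
    ap_tx_power_dbm: float,
    measured_dl_rssi_dbm: float,
    target_ul_rssi_dbm: float,
    min_tx_dbm: float = -10.0,  # hypothetical device power limits
    max_tx_dbm: float = 20.0,
) -> float:
    """Return the station transmit power that lands its uplink signal at the
    AP's target receive level, clamped to the device's power range."""
    # Path-loss estimate: what the AP sent minus what the station received.
    path_loss_db = ap_tx_power_dbm - measured_dl_rssi_dbm
    desired_dbm = target_ul_rssi_dbm + path_loss_db
    return max(min_tx_dbm, min(max_tx_dbm, desired_dbm))


if __name__ == "__main__":
    # Two hypothetical stations, one near and one far, with the same AP target.
    near = uplink_tx_power_dbm(20.0, -40.0, -60.0)  # 60 dB path loss
    far = uplink_tx_power_dbm(20.0, -75.0, -60.0)   # 95 dB path loss, clamped
    print(near, far)
```

In this model, the path-loss estimate comes straight from the measured RSSI. Any RSSI error therefore becomes a transmit power error of the same size, which is one reason RSSI accuracy matters.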

Accurate received signal strength indicator (RSSI) measurement is also important for the precise power control that maximizes range and throughput. Depending on this accuracy, stations qualify as either Class A or Class B devices, and APs may give the higher-accuracy (Class A) devices preference in channel allocation.

Testing the EVM of individual RUs is a new requirement in 802.11ax. For uplink signals, the EVM of each RU must be good enough for the AP to demodulate the signal correctly. A degraded signal can also interfere with the RUs of other users, lowering their throughput.
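Per-RU EVM can be computed by restricting the usual RMS EVM calculation to the subcarriers that belong to each RU. The sketch below assumes the received symbols have already been equalized and paired with their ideal reference points. The subcarrier indices and RU names in the usage example are made up and do not follow the standard's tone plan.

```python
import math
from typing import Dict, List


def evm_db(measured: List[complex], reference: List[complex]) -> float:
    """RMS EVM in dB: error power normalized to average reference power."""
    error_power = sum(abs(m - r) ** 2 for m, r in zip(measured, reference))
    ref_power = sum(abs(r) ** 2 for r in reference)
    if error_power == 0:
        return float("-inf")  # ideal signal, no error at all
    return 10.0 * math.log10(error_power / ref_power)


def per_ru_evm_db(
    measured: Dict[int, complex],
    reference: Dict[int, complex],
    ru_map: Dict[str, List[int]],
) -> Dict[str, float]:
    """EVM for each RU, using only the subcarrier indices assigned to that RU."""
    return {
        ru: evm_db([measured[k] for k in tones], [reference[k] for k in tones])
        for ru, tones in ru_map.items()
    }


if __name__ == "__main__":
    ref = {k: 1 + 0j for k in range(-4, 4)}
    # Hypothetical: RU "A" has small errors, RU "B" has 10x larger errors.
    meas = {k: ref[k] + (0.01 if k < 0 else 0.1) for k in ref}
    print(per_ru_evm_db(meas, ref, {"A": [-4, -3, -2, -1], "B": [0, 1, 2, 3]}))
```

A single EVM figure computed over all eight subcarriers would blend the two RUs together and hide which user's signal is degraded.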

For downlink signals, the EVM of the sub-channels dedicated to each station must be measured. Because sub-channel power levels can vary significantly with the distance between the AP and each station, fully testing an AP as a device under test (DUT) in DVT requires measuring sub-channel EVM in scenarios that emulate stations at different distances.

Characterizing EVM versus power is also important in 802.11ax, because digital pre-distortion (DPD) is expected to be used extensively. To ensure proper performance, EVM must be measured over a range of power levels, not just at one.
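A sweep reveals problems that a single-point test misses. The sketch below takes (output power, EVM) pairs from a sweep and reports the highest power that meets a limit, along with every power level that fails. The function name is hypothetical, and the sample values in the usage example are invented to illustrate a mid-range failure; they are not measured data.

```python
from typing import Iterable, List, Optional, Tuple


def evm_sweep_summary(
    sweep: Iterable[Tuple[float, float]], limit_db: float
) -> Tuple[Optional[float], List[float]]:
    """Return (highest passing power, sorted list of failing powers).

    Each sweep point is (output_power_dbm, evm_db). Lower EVM in dB is better,
    so a point passes when evm_db <= limit_db.
    """
    passing: List[float] = []
    failing: List[float] = []
    for power_dbm, evm in sweep:
        (passing if evm <= limit_db else failing).append(power_dbm)
    return (max(passing) if passing else None), sorted(failing)


if __name__ == "__main__":
    # Invented sweep: EVM degrades at high power, and there is also a dip at
    # 10 dBm that a single test at 15 dBm would never reveal.
    sweep = [(0.0, -37.0), (5.0, -38.0), (10.0, -34.5), (15.0, -36.0), (18.0, -33.0)]
    print(evm_sweep_summary(sweep, limit_db=-35.0))
```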

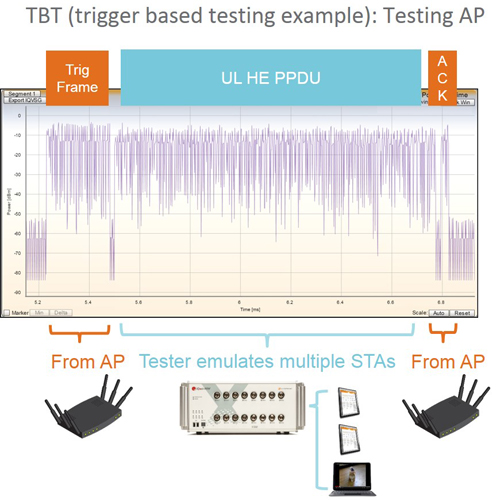

For multiple STAs to share the sub-channels of the same AP at the same time, the AP must act like a cellular base station, or a conductor in an orchestra, and coordinate uplink transmissions from the STAs. To do so, the AP transmits a downlink control frame, called a trigger frame, to its users. The trigger frame solicits an uplink response from each user, called a high-efficiency trigger PLCP protocol data unit (HE-TRIG PPDU, also known as the HE trigger-based or HE TB PPDU).

After the AP receives the responses, it sends an acknowledgment (ACK) back to the users. So that packets from all the stations reach the AP at the same time, each station must synchronize its clock, both its timing and its carrier frequency, to the AP's. Large carrier frequency offset (CFO) or timing errors cause interference between users, which in turn reduces network capacity.

Trigger-based testing can target either a station or an AP: the tester emulates the opposite role, acting as an AP when testing a station, or as stations when testing an AP. As an AP, the tester must carry on real-time communication with the station DUT. This lets it verify that the DUT begins its response one short inter-frame space (SIFS) after the trigger frame, within 400 ns, and that the DUT synchronizes to the AP's clock within 350 Hz.
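The two station-side checks reduce to simple comparisons once the tester has done three things: timestamped the end of its trigger frame, timestamped the start of the DUT's response, and estimated the response's residual frequency offset. A minimal sketch follows. The 400 ns and 350 Hz tolerances are the figures given in the text. The 16 µs SIFS applies to the 5 GHz band, so pass the appropriate value for other bands. The function and field names are hypothetical.

```python
SIFS_5GHZ_US = 16.0          # short inter-frame space in the 5 GHz band
TIMING_TOLERANCE_NS = 400.0  # response-timing tolerance stated in the text
CFO_TOLERANCE_HZ = 350.0     # frequency-sync tolerance stated in the text


def check_trigger_response(
    trigger_end_us: float,
    response_start_us: float,
    residual_cfo_hz: float,
    sifs_us: float = SIFS_5GHZ_US,
) -> dict:
    """Compare a station DUT's trigger-based response against the tolerances."""
    # Deviation of the response start from the ideal "trigger end + SIFS" instant.
    timing_error_ns = (response_start_us - trigger_end_us - sifs_us) * 1000.0
    return {
        "timing_error_ns": timing_error_ns,
        "timing_ok": abs(timing_error_ns) <= TIMING_TOLERANCE_NS,
        "cfo_ok": abs(residual_cfo_hz) <= CFO_TOLERANCE_HZ,
    }


if __name__ == "__main__":
    print(check_trigger_response(100.0, 116.3, residual_cfo_hz=120.0))
    print(check_trigger_response(100.0, 116.5, residual_cfo_hz=-500.0))
```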

When testing an AP, the tester must be able to emulate stations operating close to the edges of the specifications. By introducing impairments, test engineers can stress-test the AP DUT to verify its real-world performance. Varying the frequency offset, timing offset, and power level of each emulated station lets engineers test the AP rigorously and correlate specific impairments with the AP's behavior.
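When a tester emulates stations, impairments like these are applied to each emulated station's baseband waveform before transmission. The sketch below applies a carrier frequency offset, an integer-sample delay, and a gain change to a list of complex samples. It illustrates the idea only and does not describe how any particular instrument implements it. Fractional-sample delays would need interpolation, which is omitted here, and the function name and 20 MHz sample rate are hypothetical.

```python
import cmath
import math
from typing import List


def apply_impairments(
    samples: List[complex],
    sample_rate_hz: float,
    cfo_hz: float = 0.0,
    delay_samples: int = 0,
    gain_db: float = 0.0,
) -> List[complex]:
    """Return samples with a frequency offset, whole-sample delay, and gain applied."""
    gain = 10 ** (gain_db / 20.0)  # amplitude gain from a power ratio in dB
    rotated = [
        gain * x * cmath.exp(2j * math.pi * cfo_hz * n / sample_rate_hz)
        for n, x in enumerate(samples)
    ]
    # Model a timing offset as leading zeros (a late arrival at the AP).
    return [0j] * delay_samples + rotated


if __name__ == "__main__":
    # Hypothetical emulated station: +350 Hz CFO, 4-sample delay, 3 dB weaker.
    tone = [1 + 0j] * 8
    sta = apply_impairments(tone, 20e6, cfo_hz=350.0, delay_samples=4, gain_db=-3.0)
    print(len(sta), abs(sta[-1]))
```

Summing several such impaired waveforms, each with its own settings, approximates multiple stations arriving at the AP with different offsets and power levels.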

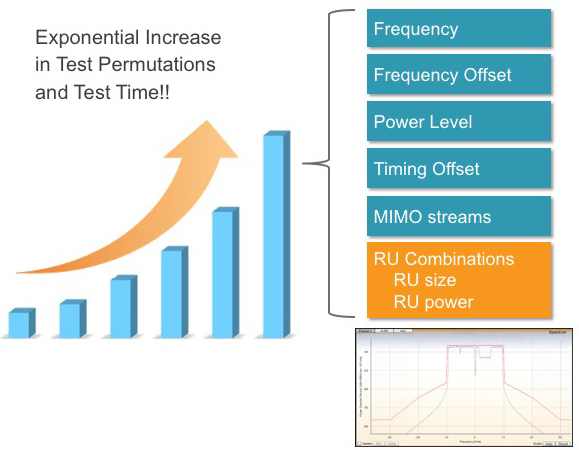

Pitfall #2 — 802.11ax Devices Need More Test Combinations

802.11ax DVT requires far more test combinations than previous generations of Wi-Fi. DVT experiments must cover both the 2.4 GHz and 5 GHz bands. Frequency offset, power level, and timing offset must be varied for each user to study the effect of impairments. When these permutations are combined with hundreds of RU size and allocation combinations and up to eight MIMO streams, test planning resources and test time can be expected to grow significantly compared to 802.11ac DVT.

802.11ax is a new technology whose available features are still evolving. Chipset companies continuously update their firmware to implement and improve 802.11ax features, and device companies need to adapt to these changes quickly and run regression tests on their devices. Frequent regression testing exposes deviations at an earlier stage and reduces overall risk.
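Regression testing across firmware releases comes down to comparing each test case's result with a baseline. The sketch below assumes results are stored as hypothetical {test_id: EVM in dB} dictionaries. It uses an arbitrary 0.5 dB worsening as the threshold worth flagging, and also reports tests that silently dropped out of the new run.

```python
from typing import Dict, List, Tuple


def find_regressions(
    baseline: Dict[str, float], candidate: Dict[str, float], tolerance_db: float = 0.5
) -> Tuple[Dict[str, float], List[str]]:
    """Compare EVM results (dB) from a new firmware run against a baseline run.

    Returns (tests whose EVM worsened by more than tolerance_db, mapped to the
    change in dB; tests present in the baseline but missing from the new run).
    EVM in dB is negative, so a larger value means worse quality.
    """
    worsened: Dict[str, float] = {}
    missing: List[str] = []
    for test_id, old_evm in baseline.items():
        if test_id not in candidate:
            missing.append(test_id)
            continue
        delta = candidate[test_id] - old_evm
        if delta > tolerance_db:
            worsened[test_id] = delta
    return worsened, sorted(missing)


if __name__ == "__main__":
    fw_old = {"ru26_5g": -36.0, "ru242_5g": -37.0, "ru106_2g4": -35.5}
    fw_new = {"ru26_5g": -36.2, "ru242_5g": -35.9}
    print(find_regressions(fw_old, fw_new))
```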

With test planning and execution happening more often, and with the large number of test case permutations introduced in 802.11ax, DVT test engineers are now building larger and more complex test experiments. Constructing these experiments under time pressure is itself a pitfall.

DVT now requires a more systematic approach than simple manual testing. Experiments need to be repeated with the same test methodologies, and results must be compared consistently. Whether test engineers write their own automated test programs or rely on third-party software, they should use automated test software to simplify the construction of large DVT experiments.
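One way to keep large experiments repeatable is to describe them as data and let a runner expand them into test cases. The same definition can then be rerun unchanged after every firmware update. The `Experiment` class and `run_experiment` function below are hypothetical, and the `measure` callback stands in for whatever instrument control a real test program would use.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Any, Callable, Dict, Iterator, List


@dataclass
class Experiment:
    """A named, declarative sweep: each key maps to the values to test."""

    name: str
    sweeps: Dict[str, List[Any]] = field(default_factory=dict)

    def cases(self) -> Iterator[Dict[str, Any]]:
        """Yield every combination of sweep values in a deterministic order."""
        keys = list(self.sweeps)
        for values in product(*(self.sweeps[k] for k in keys)):
            yield dict(zip(keys, values))


def run_experiment(
    exp: Experiment, measure: Callable[[Dict[str, Any]], float]
) -> List[Dict[str, Any]]:
    """Run measure() on every case and return one result row per case."""
    return [{**case, "result": measure(case)} for case in exp.cases()]


def fake_measure(case: Dict[str, Any]) -> float:
    """Placeholder standing in for a real instrument measurement."""
    return -35.0 - case["ru_size"] / 100


if __name__ == "__main__":
    exp = Experiment("ru_evm", {"band": ["2.4GHz", "5GHz"], "ru_size": [26, 242]})
    for row in run_experiment(exp, fake_measure):
        print(row)
```

Keeping the sweep definition separate from the measurement code means a new parameter becomes one more dictionary entry rather than another nested loop.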

Pitfall #3 — 802.11ax Produces Massive Data

When running a large number of tests, multiple times, over countless combinations of test parameters, test engineers inevitably end up with a substantial amount of raw data. Since the clearest way to study large volumes of data is to visualize them in meaningful graphs, most test engineers use tools that create plots from raw data.

Plots give test engineers insight into device performance, which helps them adjust the design and fine-tune the next cycle of DVT experiments. However, when generic spreadsheets are used to analyze 802.11ax data, data management and manipulation tend to take more time and effort, leaving less time for actual test engineering.

Given the increased number of tests, test cycles, and changing requirements, test engineers need to rapidly organize an enormous amount of data and create plots that reveal meaningful trends and critical faults in the hardware or software.
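Organizing the data usually starts with grouping raw result rows by the test parameters of interest and summarizing each group, which is also what feeds a plot. The sketch below is a minimal stand-in for that step using only the Python standard library. The row format, field names, and pass/fail rule are assumptions, and it averages EVM values in dB for simplicity; averaging in linear units is a reasonable alternative.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional, Sequence, Tuple


def summarize(
    rows: List[Dict[str, Any]],
    group_by: Sequence[str],
    metric: str = "evm_db",
    limit: Optional[float] = None,
) -> Dict[Tuple[Any, ...], Dict[str, Any]]:
    """Group result rows by the given parameters and summarize one metric.

    For EVM in dB, the worst value is the largest (least negative) one; a group
    fails when its worst value exceeds the limit.
    """
    groups: Dict[Tuple[Any, ...], List[float]] = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in group_by)].append(row[metric])
    summary = {}
    for key, values in groups.items():
        worst = max(values)
        summary[key] = {
            "count": len(values),
            "mean": sum(values) / len(values),
            "worst": worst,
            "fail": limit is not None and worst > limit,
        }
    return summary


if __name__ == "__main__":
    rows = [
        {"ru_size": 26, "power_dbm": 10, "evm_db": -36.0},
        {"ru_size": 26, "power_dbm": 10, "evm_db": -34.0},
        {"ru_size": 242, "power_dbm": 10, "evm_db": -37.0},
    ]
    for key, stats in summarize(rows, ["ru_size"], limit=-35.0).items():
        print(key, stats)
```

Because the grouping keys are passed in as a list, the same function can regroup the data by RU size and power level, or by any new parameter a later test configuration adds, without code changes.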

To analyze 802.11ax data efficiently, test engineers need a more sophisticated data analysis tool than a generic spreadsheet. Whether they write their own tool or rely on a third-party one, it is important to select it using these three criteria:

- Quick visualization of data,

- Maximizing engineering resources by automating recurring tasks, such as creating reports, and

- Flexibility in adapting to changing test configurations and parameters.

With the right analysis tool, test engineers should be able to quickly visualize data and gain insight into the device performance.

Avoiding 802.11ax DVT Testing Pitfalls

In this article, we discussed three common challenges of 802.11ax DVT: test schemes that are new and more complex than those of 802.11ac, the growing number of test case permutations and the test time they require, and the need for more sophisticated data analysis tools. To be truly ready for the next evolution of Wi-Fi standards, device makers must understand and adopt new test methodologies for their 802.11ax devices, use automated test software to create test flows quickly and efficiently, and select a smart data analysis tool up front to increase test engineering productivity.

802.11ax promises to dramatically increase overall network capacity and deliver the much-anticipated throughput gains in dense user environments. Expectations for 802.11ax are high, and so is the pressure to bring products to market quickly. By planning for these changes in advance, however, device makers can ensure that their 802.11ax devices deliver the expected performance in customers’ hands.

About The Author

Yuka Muto is a Product Manager at LitePoint, responsible for the company’s Wi-Fi and other connectivity test products, and has an extensive background in RF test engineering. Before joining the product marketing team, she was an RF test engineer and a field application engineer who developed and supported automated RF test solutions for manufacturing lines. Yuka received her Bachelor of Science and Master of Science in Electrical Engineering from the University of Michigan.